How a research lab saved hundreds of hours of work and digitized everything in Grist

In 2024, David Fabijan ran out of printer paper.

It started as an inconvenience: he needed to print questionnaires for his coworkers at the Jozef Stefan Institute to fill out by hand, and then pass them on to a poor soul to manually retype into Excel.

The underlying issue was clearly more fundamental.

“That sounds like a last century sort of issue,” David recalled.

A year later, when the day came to set up a brand-new, cutting-edge lab and catalog over 1,500 diverse materials, that paper shortage forced a larger question: could David usher his lab operations into the 21st century without the security risks, switching costs, platform lock-in, and shaky adoption rates that usually come with such a move?

The LIMS problem

The Advanced Materials Department at Jozef Stefan Institute is made up of 20 researchers, 6 support staff, and 10 students working across methodologically diverse domains – from catalytic nanoparticles to green hydrogen production, glass foam insulation to 3D printed ceramics, and pulsed laser deposition on thin films to materials that detect explosives. This diversity makes for both excellent science and absolute chaos when it comes to keeping sane data practices. So they tried the usual suspects, like laboratory information management systems (LIMS) and other lab management platforms.

None of them worked.

“Lab management software is, in general, awful,” David explained. “They’re either completely useless or useful in a very specific setting. If you have a lab that deals with 10 different topics, it’s just not dynamic enough to actually do the work.”

“Lab management software is, in general, awful. They’re either completely useless or useful in a very specific setting.”

–David Fabijan, Technical Expert in Complex Dielectric Permittivity Measurements (or self-proclaimed Lab Monkey)

The problem with a typical LIMS is that it’s designed for labs with standardized, repetitive workflows. Multi-topic research environments like the Jozef Stefan Institute needed something more flexible. As such, their software wishlist got pretty specific pretty fast: self-hosted (for privacy and compliance), open-source (for price hike avoidance and flexibility), Python-friendly (because that’s what many researchers prefer), API-driven (to connect everything), and adaptable enough to keep up with ~10 wildly different research workflows. The system also needed to interface with legacy instrumentation from the 1970s alongside current equipment.

A big ask, but after a simple Google search, they found Grist.

Building a digital lab, from post-it notes to Grist

Rather than addressing a single problem, David systematically rebuilt the department’s operational infrastructure: chemical inventory, safety documentation, instrument data management, publication tracking, and reporting workflows. The implementation addresses several distinct operational domains, and often meant salvaging processes and data from the post-it notes on fellow researchers’ desks.

1. Chemical inventory and safety compliance

Managing ~1,500 chemical reagents presented both safety and traceability challenges. The previous system relied on manual record-keeping, which created gaps in custody documentation and complicated safety audits.

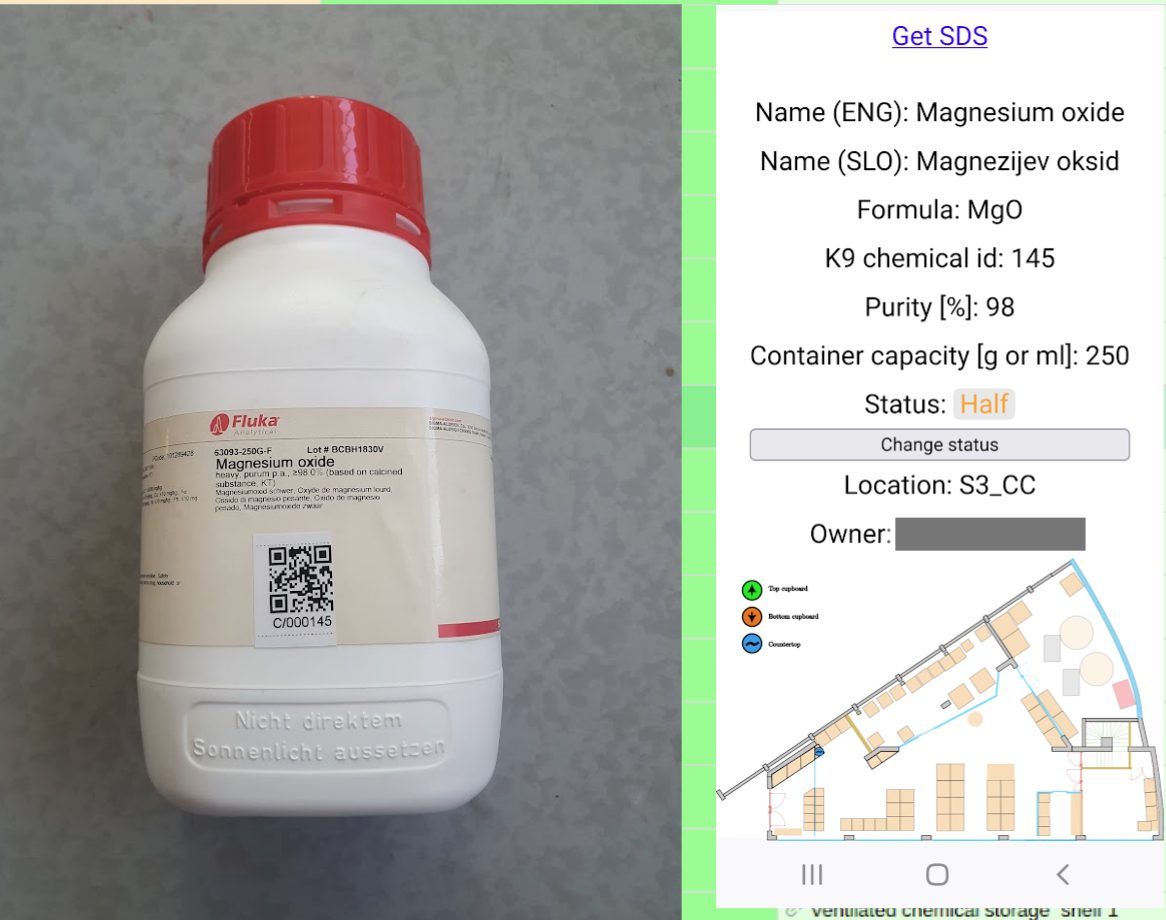

The Grist implementation centers on a searchable chemical database integrated with a QR code generator. Lab members use a mobile interface to scan labels and retrieve compound information, including location, quantity, safety data sheets (SDS), and hazard classifications. An automated webhook also parses the uploaded SDS to extract hazard information through regex pattern matching.
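The regex step could look roughly like the sketch below. It is not David's actual webhook code: it assumes the SDS text has already been extracted from the uploaded file, and the function name `extract_hazard_codes` is made up for illustration. It relies only on the standard GHS convention that hazard statements are coded as "H" plus three digits (with "EUH" for EU-specific statements).

```python
import re
from typing import List

# GHS hazard statements look like H225 or H360FD; EU-specific ones look like EUH066.
# This pattern is an illustrative assumption, not the lab's actual regex.
HAZARD_CODE = re.compile(r"\b(?:EUH\d{3}[A-Z]?|H[2-4]\d{2}[A-Za-z]{0,2})\b")


def extract_hazard_codes(sds_text: str) -> List[str]:
    """Return unique hazard codes in order of first appearance."""
    seen = {}
    for match in HAZARD_CODE.finditer(sds_text):
        # dict keys keep insertion order, which gives us ordered de-duplication
        seen.setdefault(match.group(0), None)
    return list(seen)


sample = (
    "Hazard statements: H225 Highly flammable liquid and vapour. "
    "H319 Causes serious eye irritation. H225"
)
print(extract_hazard_codes(sample))
```

The resulting list could then be written back to a hazard column on the chemical's record, so the label and mobile view always show what the SDS actually says.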

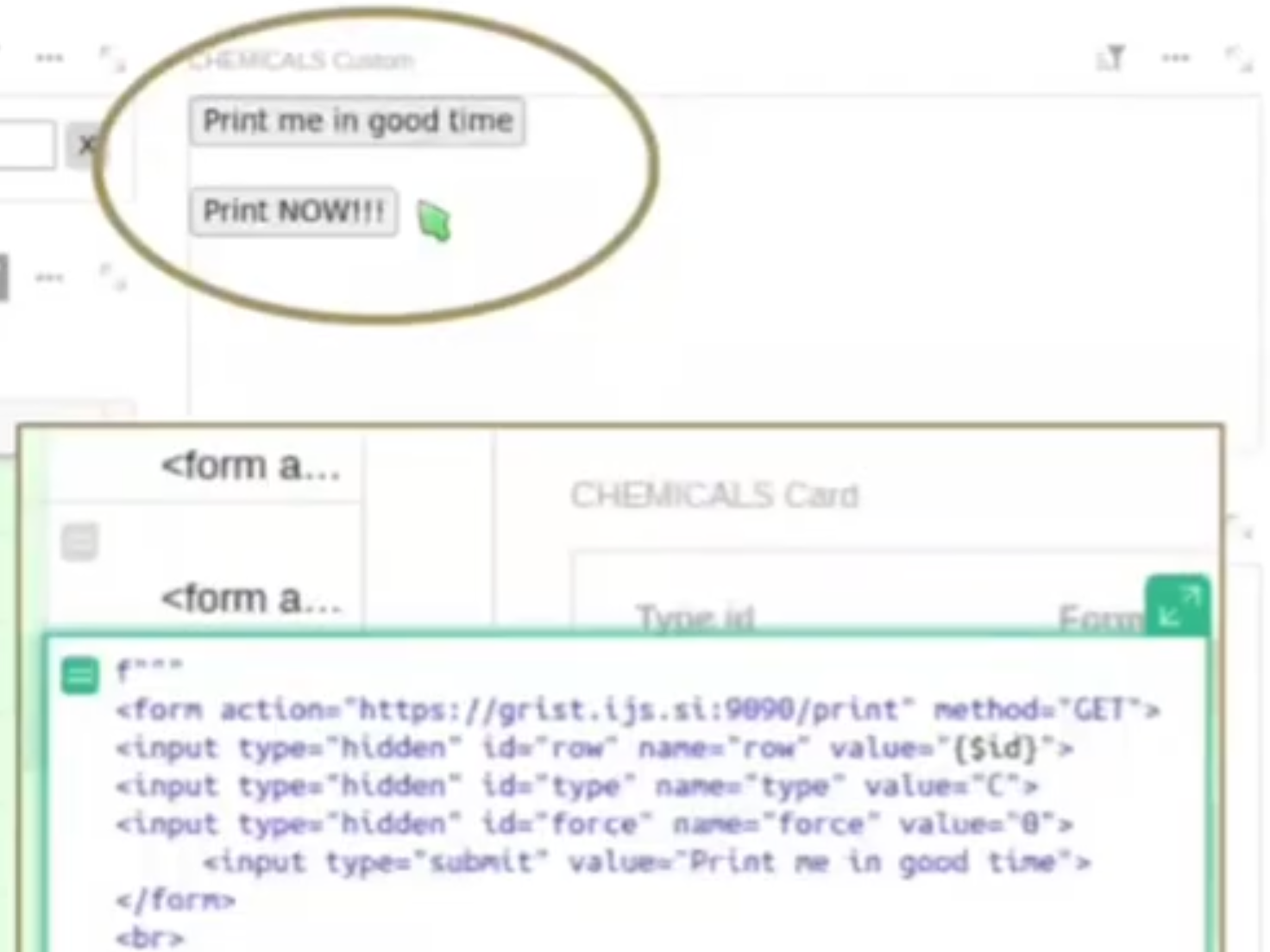

A custom print widget uses HTML and Grist’s REST API to generate a QR code label containing safety data, general facts, and the location of any chemical in the lab.

The system provides instant access to safety data, streamlines audit preparation, and establishes clear custody chains for all chemical inventory, all while saving researchers “hundreds of man hours in the end.”

2. Administrative automation

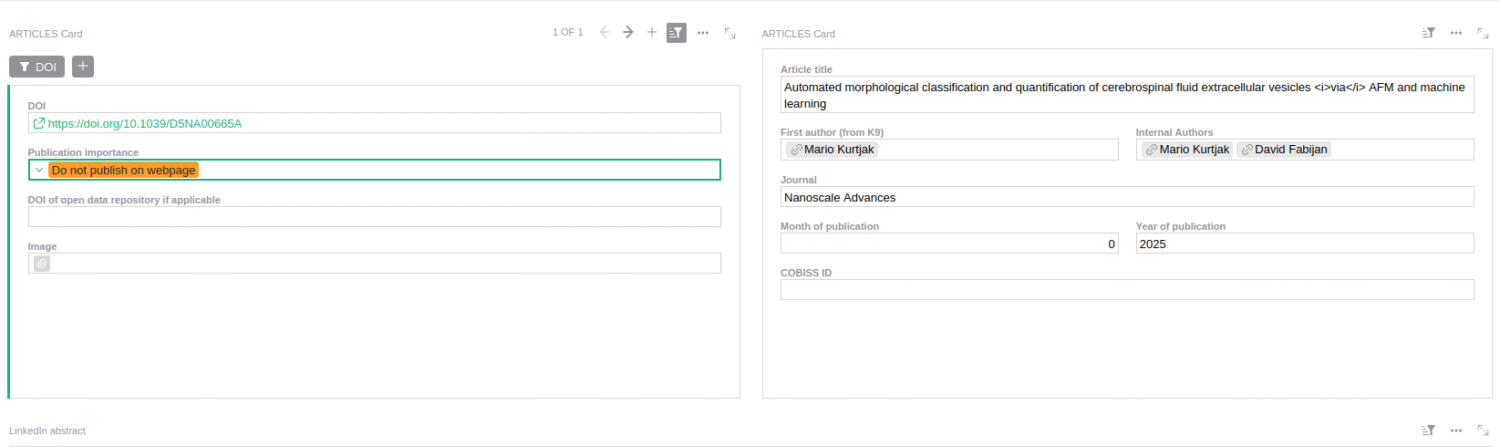

A custom-built lookup tool retrieves and normalizes publication metadata from DOIs, with custom handling for institutional proxy requirements. An automated service checks which members haven’t submitted their monthly reports and sends specific reminders rather than blanket requests.
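To give a flavor of the normalization side of such a lookup tool, here is a minimal sketch that cleans up a pasted DOI before any metadata request is made. The function name `normalize_doi` and the exact rules are assumptions for illustration; the article does not describe how David's tool does this, and the proxy handling is left out entirely.

```python
import re
from typing import Optional

# Wrappers that often surround a DOI pasted from a browser or a citation.
_PREFIX = re.compile(r"^(?:https?://(?:dx\.)?doi\.org/|doi:\s*)", re.IGNORECASE)
# Loose shape check: "10.", a 4-9 digit registrant code, "/", then a suffix.
_DOI_SHAPE = re.compile(r"^10\.\d{4,9}/\S+$")


def normalize_doi(raw: str) -> Optional[str]:
    """Strip URL or 'doi:' wrappers and lowercase; return None if it is not DOI-shaped."""
    candidate = _PREFIX.sub("", raw.strip()).lower()
    return candidate if _DOI_SHAPE.match(candidate) else None


print(normalize_doi("https://doi.org/10.1000/XYZ123"))
print(normalize_doi("not a doi"))
```

Lowercasing is safe because DOIs are case-insensitive, and it means the same paper entered twice in different forms lands on one record.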

The frontend view of the publication metadata lookup tool, showing article title, authors, journal, and time of publication.

An example email generated using the API to send specific reminders to submit monthly reports.

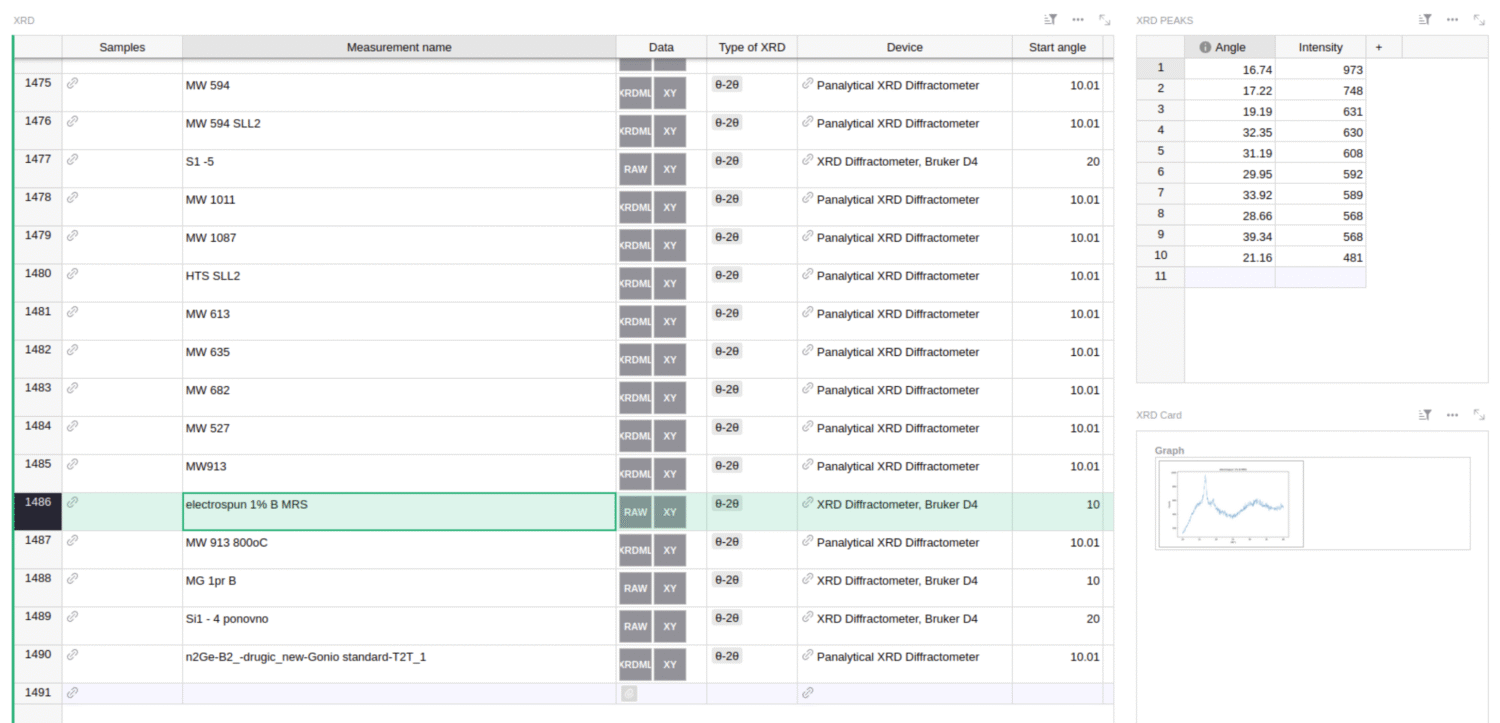

3. Instrument data integration across technological generations

Research labs accumulate instrumentation over time, creating a functional museum of analytical technology. The department operates equipment spanning five decades, each with proprietary data formats and varying degrees of network connectivity.

David established a standardized Sample ID protocol — the “Grist code” — that researchers include as metadata during data acquisition. A polling service monitors instrument outputs, parses format-specific data structures, uploads both raw and processed results to Grist, automatically associates measurements with the corresponding Sample records, and generates standardized visualizations.
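One polling pass of such a service might be sketched as follows. Everything specific here is hypothetical: the "GR-" plus six digits code format, the column names `Sample`, `Instrument`, and `File`, and the helper names. The only part taken from Grist itself is the payload shape, which follows the records format of Grist's REST API for adding rows.

```python
import re
from pathlib import Path
from typing import List, Optional, Set

# Hypothetical Sample ID format; the real "Grist code" format is not given in the article.
SAMPLE_CODE = re.compile(r"(?<![A-Za-z0-9])GR-\d{6}(?!\d)")


def extract_sample_code(filename: str) -> Optional[str]:
    """Pull the sample code out of an instrument output filename, if present."""
    match = SAMPLE_CODE.search(filename)
    return match.group(0) if match else None


def find_new_files(folder: Path, seen: Set[str]) -> List[Path]:
    """One polling pass: return files not seen before and remember them."""
    fresh = sorted(p for p in folder.iterdir() if p.is_file() and p.name not in seen)
    seen.update(p.name for p in fresh)
    return fresh


def build_records_payload(filenames: List[str], instrument: str) -> dict:
    """Build a request body in the {"records": [{"fields": ...}]} shape."""
    records = []
    for name in filenames:
        code = extract_sample_code(name)
        if code is None:
            continue  # no code in the name: leave the file for manual review
        records.append({"fields": {"Sample": code, "Instrument": instrument, "File": name}})
    return {"records": records}


print(build_records_payload(["GR-000123_xrd.xy", "notes.txt"], "XRD"))
```

A real service would call `find_new_files` on a timer, run a format-specific parser for each instrument, and send the payload to the document's records endpoint with an API key.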

This pipeline now operates for around 10 instruments. The approach provides unified data capture regardless of instrument vintage and eliminates the common problem of orphaned data files on individual workstations.

Table tracking data connected to Jozef Stefan Institute’s X-ray machine.

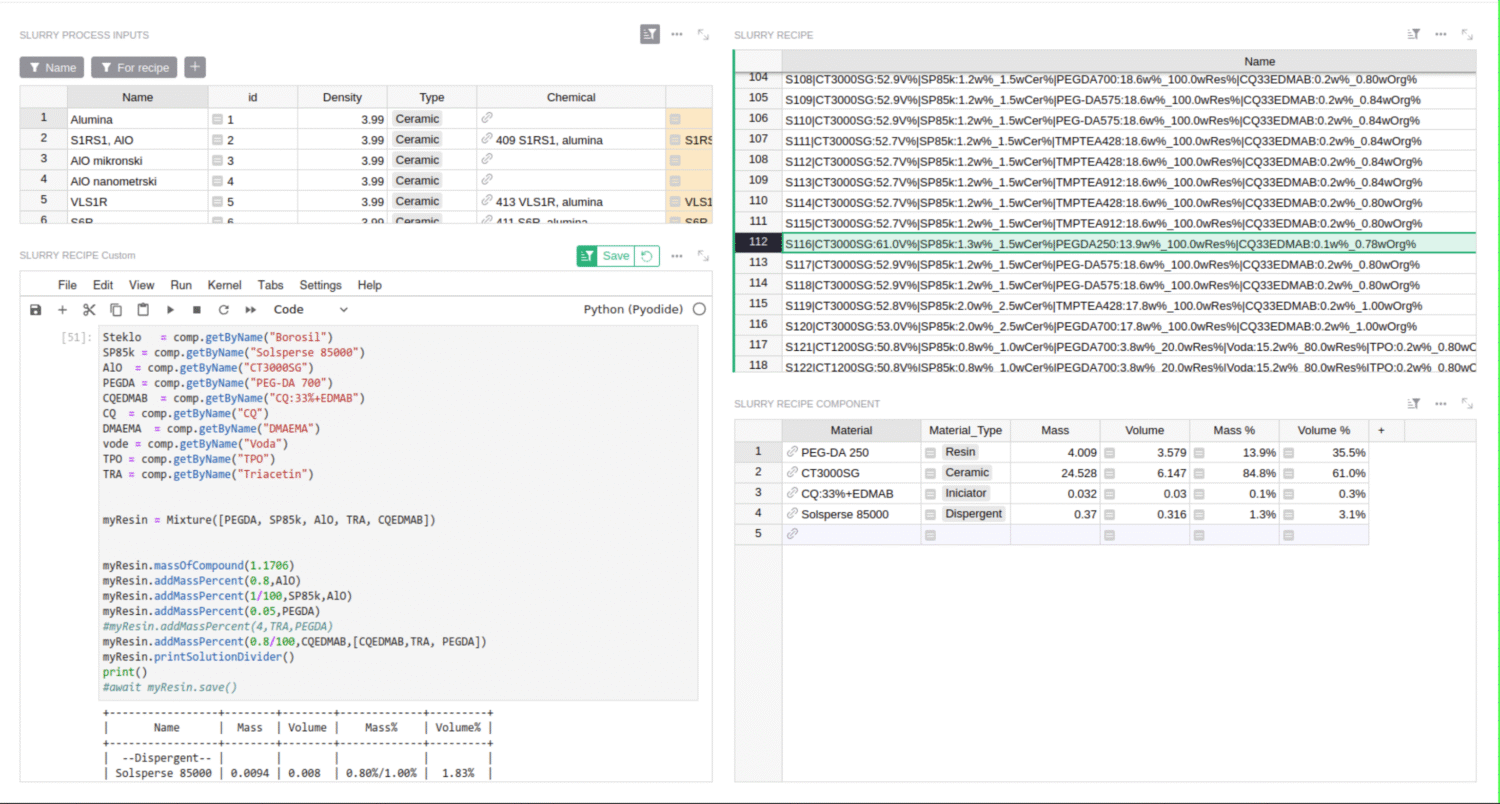

4. Codifying tacit knowledge through computational tools

And here’s David’s favorite part of “Gristifying” laboratory operations: students learning to prepare ceramic slurries (material recipes for 3D printing) typically receive informal guidance about material ratios that “work well.” Hardly a foundation for reproducible science.

David leveraged Grist’s integrated Python environment to develop process calculators that create these 3D printing recipes automatically. Users input target material properties and receive precise component masses and volumes calculated through linear algebraic methods.
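As a rough illustration of the linear-algebra idea, and not David's actual calculator, a three-component slurry can be written as three linear constraints on the unknown masses and solved directly. The constraints chosen here (fixed total mass, target powder volume fraction, dispersant dosed as a fraction of powder mass), the function names, and the example densities are all assumptions.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented copy, so the caller's lists are left untouched.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("constraints are not independent")
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - tail) / m[row][row]
    return x


def slurry_masses(total_mass, powder_vol_fraction, dispersant_per_powder,
                  rho_powder, rho_solvent, rho_dispersant):
    """Return [powder, solvent, dispersant] masses meeting the three targets."""
    phi = powder_vol_fraction
    a = [
        [1.0, 1.0, 1.0],  # masses add up to the total
        [(1 - phi) / rho_powder, -phi / rho_solvent, -phi / rho_dispersant],  # volume fraction
        [-dispersant_per_powder, 0.0, 1.0],  # dispersant scales with powder mass
    ]
    return solve_linear(a, [total_mass, 0.0, 0.0])


# Illustrative inputs only: 100 g batch, 40 vol% powder, 1 wt% dispersant on powder.
powder, solvent, dispersant = slurry_masses(100.0, 0.40, 0.01, 3.95, 1.0, 1.1)
print(round(powder, 2), round(solvent, 2), round(dispersant, 2))
```

Volumes follow by dividing each mass by its density. Adding a component means adding one more unknown and one more constraint row, which is what makes the matrix form convenient for recipes with many ingredients.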

Similar calculators now support laser ablation processes for thin film deposition and classical sintering workflows. The tools accelerate training for new lab members while improving experimental consistency.

David’s complex ceramic slurry tool, complete with material names, mass, volume, and composition within each recipe.

Measurable outcomes

The ultimate time savings of the Advanced Materials Department’s migration to Grist are substantial. Automated SDS processing alone recovers hundreds of hours previously sacrificed to manual lookups and data entry. Instrument data pipelines eliminate recurring file location problems. Process calculators reduce the learning curve for new students making 3D printed ceramics.

Those 1,500 chemicals are now labeled, scannable, and linked to current safety data. Instrument measurements automatically connect to Sample IDs no matter how old the device is. That’s actual traceability instead of hoping someone remembers. Compliance reporting improved without adding more meetings or making people fill out more forms.

“It saves hundreds of man hours in the end.”

–David Fabijan

Encouraging adoption

Younger lab members adopted the systems readily. Senior researchers were, in David’s assessment, “politely skeptical” initially, then embraced Grist once functionality became apparent.

David’s trick was making tools that are useful right away. Processes that make your work easier today get used, while post-hoc data entry that helps management later gets ignored. David focused on calculators and automations that solved immediate problems for researchers, not systems that would theoretically generate better reports six months from now.

Why this approach works

Most commercial lab management platforms optimize for standardized, repetitive workflows. Research environments often require the opposite. Evolving methodologies, diverse analytical techniques, and shifting research priorities call for supporting software flexible enough to be an advantage, not a hindrance.

Grist’s data model is built to handle rapid iteration. Tables, relationships, and interface components evolve alongside individual research workflows through direct collaboration with end users.

Grist’s API enables it to function as the lab’s integration hub – QR label generation, SDS parsing, instrument data ingestion, and reporting automation all use the same consistent interface. Native Python support enables sophisticated calculations without additional applications.

The self-hostable and open-source foundation of Grist satisfies institutional requirements, such as data sovereignty for compliance and unusual network setups like IPv6-only subnets, proxies, and air-gapped instrument networks. Architectural flexibility that addresses real constraints.

Check out David’s GristCon 2025 presentation and GitHub for his repositories and Grist contributions.

“The biggest benefit of all is the dynamic nature of Grist. Things that would otherwise take a few days or weeks of back and forth with the software provider can be added in a matter of minutes.”

–David Fabijan

Grist and multidisciplinary research teams

Many research labs operate with similarly variable data management practices. Shared network drives, inconsistently maintained spreadsheets, and critical knowledge held only by specific individuals aren’t signs of negligence, but the result of prioritizing research over infrastructure in resource-constrained environments. That said, there’s often a better way.

What makes the difference in many Grist deployments is role-based access, which avoids unnecessary duplication, column hiding, or requiring all users to navigate irrelevant information to find what they need. Researchers see their data, managers see aggregate views, students see training materials, external users see a limited slice – all from the same underlying tables.

Ready to digitize your lab?

If your lab currently manages data and operations through complex spreadsheets or limiting research-focused platforms, a more flexible and robust relational platform exists.